Earlier this century, when a friend of mine who was in the Web business asked me to join his company not as a techie but as a thinker about the Internet, I promptly proceeded to read three books that I thought could help me appreciate the power of this medium. After all, I had been totally impressed by the Open Source concept that had given us Linux and other applications that rely on the collective commitment of the global community to the common good, not private profit. As a longtime adherent of Marxist analysis, I thought the Internet was enabling a kind of progressive vision that had long been stymied by traditional politics and conventional systems. In very short order, I published review/commentaries titled “Looking for Leonardo,” “Secrets of the Bazaar,” and “The Internet Galaxy” in this magazine to share my enthusiasm for the liberating possibilities, as well as the challenges and threats, of the Internet. Years later, I added “The Second Machine Age and Its Discontents.” I was resolutely not a Luddite. I even deliberately avoided reading books or essays that questioned our seemingly digital utopia.

Until now.

In case you are wondering, the problem is not Russian or Chinese hacking, theft of personal information from databases, or President Trump’s tweets. It’s just that I started suspecting that I had been losing the power to read and concentrate because of the constant lure of the phone in my pocket and the laptop on my desk. The Internet, I felt, was invading every fiber of my consciousness, and that was a disconcerting, if not life-threatening, realization.

To understand what was happening to me, I picked up Nicholas Carr’s book The Shallows: What the Internet Is Doing to Our Brains, which is almost ten years old now. No sooner had I started reading the first pages than I knew I had been avoiding the bitter truth of why the Internet is a poisoned gift. For someone like me who was nurtured on the magical power of printed books, magazines, journals and newspapers, Carr’s treatise (which is, in some ways, a call to arms to save our humanity) is a total rebuke of my facile and premature hope for a medium that is more show than substance.

I quickly discovered that Carr, who majored in English at Dartmouth in the late 1970s and has dabbled proficiently in computing ever since, is the perfect guide to my conundrum. An avid reader of books, he started realizing that he was fast losing the power of concentration, growing fidgety and becoming somewhat of a scatterbrain. He was worried that he was unable to “pay attention to one thing for more than a couple of minutes” and he found out that he was not alone: English majors at elite universities were having difficulty reading whole books. The ancient culture of the book was now being eclipsed by the Internet, and no one had a clue about what brave new world would emerge in its place.

Computing is not just a technology whose value depends on the way it is used. Like earlier, smaller-scale inventions such as reading, writing, and the typewriter, it affects the thought process itself. When Friedrich Nietzsche, still in his 30s, was beset by serious ailments resulting from old injuries, he was saved by a Danish-made typewriter; yet the German philosopher realized that the machine was actually changing his style. “Our writing equipment,” he said to a colleague, “takes part in the forming of our thoughts.” Interestingly, in 1916, after T.S. Eliot had switched from writing by hand to using a typewriter, he wrote that he found himself “sloughing off all my long sentences which I used to dote upon. Short, staccato, like modern French prose. The typewriter makes for lucidity, but I am not sure it encourages subtlety.” Such machines have a deeper effect on our self-consciousness, too. Because of digital computers, we now talk about “brain circuits” and our behavior as being “hardwired.” Our brains, research has shown, are nothing if not plastic and malleable, although they do develop, through repetition, hard patterns.

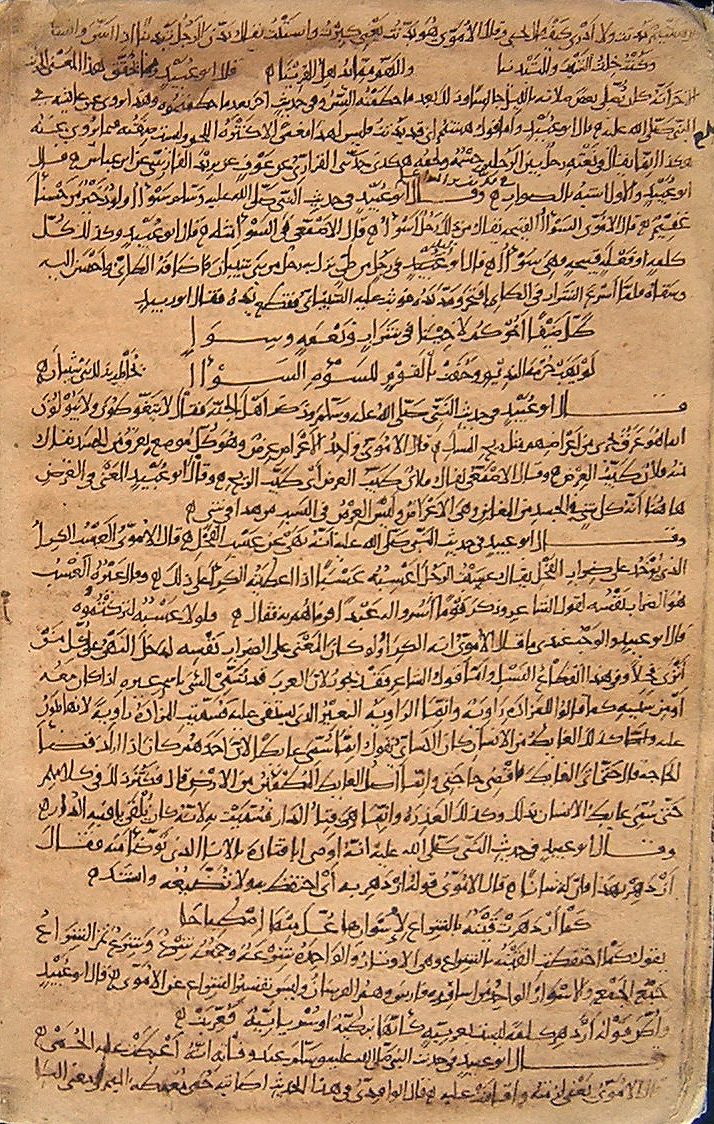

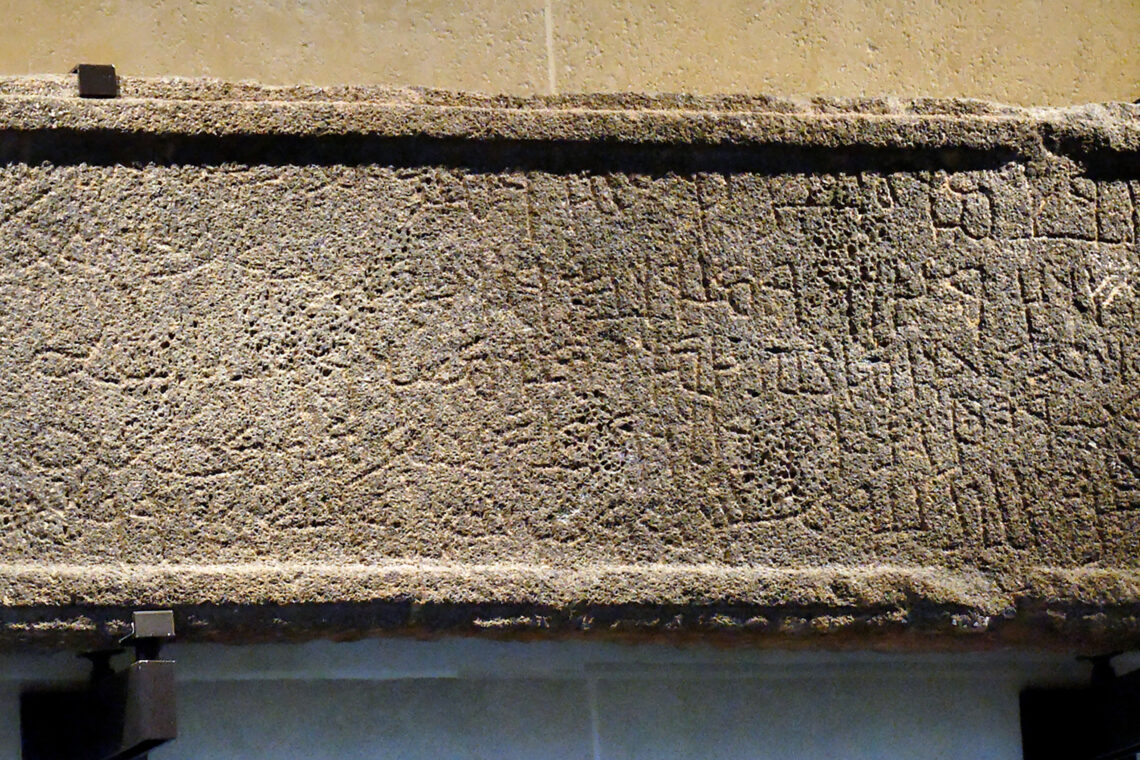

Reading and writing are also older technologies that have done much to alter our brains. Socrates, in Plato’s Phaedrus, was wary of writing because it weakens memory. But writing itself was a work in progress: scripted, at first, as a reflection of speech (no word breaks, punctuation, etc.), it gradually built its own grammar and syntax in the Middle Ages, which, in turn, affected the way we speak and think. Reading in silence meant losing oneself in a book and being in a deep engagement with the text/author. Books gave rise to the literary brain and to a new ethic. “Quiet, solitary research became a prerequisite for intellectual achievement,” writes Carr. “Originality of thought and creativity of expression became the hallmarks of the model mind.” The invention of the printing press by the German goldsmith Johannes Gutenberg in the mid-15th century enhanced this book culture by enriching vocabularies and, therefore, expanding consciousness. New, radical and transformative ideas were published in books.

Other inventions, such as maps and clocks, also transformed our relations to space and the rhythms of everyday life. Designed for practical purposes and imposed by elites at first, they ended up shaping the human experience in irreversible ways. There is no doubt that every adopted discovery eventually upends or displaces traditional practices, but (and this is the point of Carr’s book) the world has never experienced anything like the power of digital computing. This invention is in a category all its own.

When the British mathematician Alan Turing, famous for having helped crack the Nazi Enigma codes during the war, suggested that “one digital computer” could handle all systems or processes, he was, in effect, foreseeing a day when one machine could absorb all previously existing technologies, whether maps, clocks, books, music, television, or radio. The computer, with its text and pages, borrowed the vocabulary of books, but it produces radically different effects on users, even though they think they are reading books on their screens. With hyperlinks, search engines, and multimedia tools embedded in such texts, the reading experience consists of a series of clicks, scrolls, and distractions. Writing emails is also a far cry from writing 19th-century letters. Style and eloquence, not to mention aesthetics, are sacrificed to expediency, or worse.

In the 1970s, when the graphical interface built around on-screen “windows” began to make “multitasking” easy, the culture of the solitary reader with a book gave way to the manual dexterity of the juggler. Like a mercurial god, the fast and voracious Net insists on constant attention even while it scatters the focus of its seekers by stimulating them with all sorts of trivia. It may strengthen a few mental functions, but the damage it causes to the brain is real. (Carr, at one point, was genuinely worried about not being able to read long texts and had to make a conscious decision to go on a digital fast to be able to write his book.) A scattered brain can’t think or learn deeply. Users learn to browse, skim, surf, play, and shop, but not read, reflect, and focus in the classical sense. The Net experience promises abundance but offers scarcity: In the end, the user emerges from his or her Net experience depleted. The ancient Roman philosopher Seneca summed up this contradiction well when he wrote that “to be everywhere is to be nowhere.” Prominent neuroscientists have reached the same conclusion.

When Carr was writing his book, Google had embarked on a mind-blowing project (kept secret at first) of digitizing all books to make them available anytime and anywhere; but making books “discoverable and searchable online” with a few clicks reduces the social project of bookmaking to mere text on a screen. For Google, a book is just “another pile of data” and the brain is just another computing device that could be enhanced by the use of better algorithms. Socrates was, in a way, right: We have outsourced our memory to the Web only to impoverish our minds.

I can hear many of my friends and colleagues dismissing our fear of digital computing as a form of nostalgia for tradition, but this fear was expressed by some of the most creative pioneers of the digital age. After obtaining a doctorate from Yale in 1899, Lee de Forest invented an electronic audio amplifier called the Audion and, unbeknownst to him, launched “the age of electronics.” But with time he found little to celebrate. In 1952, he published an article titled “Dawn of the Electronic Age” in Popular Mechanics to condemn the “moral depravity” of the “commercial broadcast media” as well as “the moronic quality of the majority of today’s radio programs.” De Forest’s view of the future was no more reassuring: he predicted an even worse form of dehumanization through the use of electronics. “A professor,” he wrote, “may be able to implant knowledge into the reluctant brains of his 22nd-century pupils. What terrifying possibilities may be lurking there! Let us be thankful that such things are only for posterity, not for us.”

In the mid-1960s, Joseph Weizenbaum, an MIT computer scientist, wrote the software for an interactive program called ELIZA (named after the character Eliza Doolittle in George Bernard Shaw’s play Pygmalion) that became wildly popular and was even hailed by prominent psychiatrists as a promising therapeutic tool. Weizenbaum was eventually taken aback by the success of his invention and the hopes people pinned on it, so in 1976 he published Computer Power and Human Reason to warn against investing computers with human attributes at the expense of our own wellbeing. He was, however, promptly dismissed by fellow computer scientists and Artificial Intelligence enthusiasts.

De Forest and Weizenbaum were humanists at heart, but their inventions worked against their vision for humanity. Technologies such as GPS degrade natural human skills even as they seem to enhance them. Reading a book on a screen is not the same as holding a book and reading from its pages. People nowadays conflate reading a book with listening to it on tape. Yet readers are expected to engage with the book, not read “with their eyes shut,” as the 19th-century author Edward Bellamy described it. In fact, listening to books on a device named the “indispensable” that would contain all printed matter was considered such a real possibility in the late 19th century that “The End of Books” was proclaimed by Scribner’s Magazine in 1894. Phonography would replace bookmaking. If books endured, it was not for lack of technology a century or more ago but because reading is simply not the same activity as listening.

Marshall McLuhan, author of Understanding Media: The Extensions of Man, is famous for his expression “the medium is the message,” yet few know of his dark views about the dawning of the new age of “electric technology” that endangered the “American way of life” built on “Gutenberg technology,” i.e., the printed book. The medium is not just the message but also the foundation itself, as it is bound to change “patterns of perception steadily and without any resistance.” To those who, like David Sarnoff, “the media mogul who pioneered radio at RCA and television at NBC,” say that technology is merely a neutral tool, McLuhan had this to say: “Our conventional response to all media, namely that it is how they are used that counts, is the numb stance of the technological idiot.”

I chose not to Google what new developments have occurred since Carr’s hardcover book initially came out in 2010. To me, his argument is sufficient. When I wrote “Looking for Leonardo” in 2005, I ended my essay saying that “any technology that doesn’t place human welfare at its center is not worth pursuing. People have had enough of Frankensteinian schemes that belittle their value; new computing may help us fight back and restore the balance.” Now I know that not all computing, whether old or new, is good. The future Lee de Forest predicted, in which the human brain would be amplified, or even replaced, by a chip, may well be attempted in the coming decades. To think that a bunch of 20-something Americans would upend millennia of a painstakingly crafted human activity by sending the world on a fool’s errand in the digital wasteland is not a promising prospect for our civilization.

Find a good book and read it. Your life, and our future, may very well depend on it.

The position of thought and the internet

In September of 1994, I was on my way to another world. It was not my first time in the U.S., but it was my first time on a campus. I arrived in a town in New Jersey famous for its university and its lawns. Life on campus was so enclosed that in my second year I shaved my head to translate to my appearance the way I felt inside. In contrast with the hermetic campus, the world was opening to me on a screen. I got an .edu e-mail address. Non-international students in the master’s program didn’t feel imprisoned in this elitist campus, because they were somewhere else. All they had to do was enter their e-mail address and a code to get on the internet, and they were saved. They were saved but sad. The percentage of students at Princeton on Prozac (an antidepressant popular in the nineties) was such that computer screen savers were made out of a picture of the box. I even took it myself for 20 days, but stopped when I had a crash in a supermarket parking lot. Enough stupidity, I said to myself, this is not even a real accident; I cannot deal with virtuality! Communication Lair was the title I gave to my master’s thesis. I designed a new program for anonymous encounters among strangers. The project was celebrated with cynicism in the school.

Two years before going to Princeton, I created a modified version of the peep show; it was actually what got me in.

I was interested in popular devices for meeting strangers, and I started with one that was commercial and popular at the time: the peep show. My fascination with the peep show lay in the separation: “Together but alone,” “watch but don’t touch.” What I created in the legendary Madrid art gallery Buades was a private cabin for a male and a female whose actions were shown to the public in the other room of the gallery through a primitive video channel and a monitor.

I got to experience how the public nature of our privacy was quickly overridden by the adrenaline of the encounter and the presence of the second person. I believe all the males visiting that cabin had the same experience, because their behavior was far from polite; quite the opposite, they became more of what they were. The camera acted as a mirror that gave them the impression that what they were doing was not fully real. After 30 seconds, they didn’t care about being filmed. They were on camera, they knew it, and they enjoyed it. They were more themselves, more attractive or disgusting, more macho; they were simply more…

It was double: it was happening, but it wasn’t completely real, in the sense that it was orchestrated. I learned that people’s curiosity to get to know others was more important than having a fully true experience. I think that most visitors were fascinated by this controlled reality even if they had to expose themselves in a very private setting. This trick, being there but not being fully there, was for me, at the time, the direction that the world was taking. “The time spent virtually is real time, but the experience is not fully real,” I often said to myself when I sat at my computer.

I came across this magazine while searching the internet after having a WhatsApp conversation with my sister from Tangier.

I have a problem with the internet, and it is very simple: the parameters are set. In a chance encounter among strangers the risk is high, and so is the unknown nature of the outcome. On the internet, things are programmed before being offered. The biggest limitation in humans is to think first and do second instead of just doing. I don’t mean “doing without thinking,” as a nonsensical action; I mean doing as something in which one is so involved that there is no space for thought. There is no space for thought in real doing; thought is always after the fact. This change in the position of thought, before or after the action, is what has changed our world, and it has made us cowards.

Jana Leo

New York, May 14, 2019

I usually agree with you, Sir, but here you are being totally dismissive of the minorities within minorities, the marginalised for centuries, the dismissed, the heretics and the dissenters. You have to be aware that there are still dark corners in the world where religion, dogma, superstition, patriarchs and misogynists rule the roost and keep not just women but men and children in perpetual terror and darkness. I’d rather that we have scattered brains than go back to an era where information was not so easily available. To say that the Internet holds a false promise is to disregard the White Wednesdays and My Stealthy Freedom movements and to dismiss the Arab Spring, which gained momentum through the Internet. But for the Internet, the Paris protests, the Iran protests and the Black Rock protests would not even make a glint on the MSM…

So what if there is no sustained, deep reflection? That activity is for scholars; they can always retreat to the confines of the University or academia, just like sages used to retreat to the forests and philosophers to their solitude…

In fact, your approach in your book A Call for Heresy: Why Dissent is Vital to Islam and America is the solution for this. It is scholars who ought to step over the boundaries and present their ideas in plain speak (and this is where the Internet helps) to the common people. My being able to talk directly to you is proof of this.

From the preface of your above-mentioned book:

“Readers familiar with my previous academic work will find a different rhetorical approach in this book. My goal here is to address scholars and general readers from any discipline, culture, religion, and (if translated) language who want to understand how Muslims and Americans are trapped in the nightmares of their own histories. Although specialized knowledge designed for fellow academics is vital for the well-being of any society, scholarship must sometimes step over the boundaries of its sheltered environment and jealously guarded conventions to reframe questions and help create better conditions for broad, democratic dialogue.”

I hope, Sir, you will take this in a positive vein.

Regards

Arshia Malik

From a region that is still grappling with the realities of a post 9/11 world